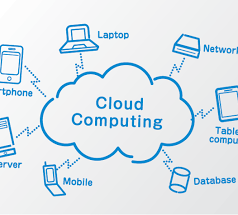

Remember when software came in a box, installed on hefty desktop towers? Today, we live in a different world, where the cloud is the backbone of how we interact with technology.

Cloud computing emerged from a desire for efficiency and accessibility. It started with sharing powerful servers in remote data centers, accessible to anyone with an internet connection. This challenged the traditional IT approach, where businesses invested heavily in their own hardware and software. Instead, you could rent these resources, scaling up or down with remarkable agility. This “pay-as-you-go” model was a game-changer, especially for businesses, allowing them to innovate faster, deploy services with unprecedented speed, and operate at a significantly lower cost. It democratized access to enterprise-grade computing power, enabling startups to compete without massive upfront investments.

Cloud Computing Today: Everywhere You Look

Today, cloud computing is so deeply integrated into our lives that we often don’t even realize it. Your favorite streaming service, whether Netflix, Spotify, or YouTube, uses the cloud to deliver movies and music. Online collaboration tools like Google Workspace, Microsoft 365, and Slack rely on the cloud for real-time editing and communication. Social media platforms like Facebook, Instagram, and X process billions of interactions daily, powered by immense cloud infrastructures. Even many smartphone apps, from navigation to mobile banking, leverage cloud services for data storage and functionality.

Beyond consumer applications, businesses of all sizes rely on cloud services for everything from basic data storage and backup to complex data analytics, machine learning, and artificial intelligence. Customer relationship management (CRM) systems like Salesforce and enterprise resource planning (ERP) software like SAP are increasingly delivered as Software as a Service (SaaS) from the cloud. This allows companies to focus on their core competencies rather than managing complex IT systems. It’s the invisible force that keeps our digital world spinning.

What Does the Future Hold?

The rise of the cloud has led to profound questions about the future of traditional hardware. Will our personal computers become little more than glorified terminals, thin clients connecting to powerful cloud-based applications? For many tasks, this is already happening. Lightweight, cost-effective devices like Chromebooks are gaining popularity because they leverage the cloud for processing power, application delivery, and data storage. Users access powerful applications and vast storage without needing high-spec local hardware.

While physical hardware won’t disappear entirely – we’ll always need something to interact with the cloud – its role is evolving. The focus may shift from raw local processing power and large internal storage to connectivity, battery life, and specialized sensors that feed data to the cloud. We might see a future where powerful computing is something you subscribe to, a utility you access as needed, rather than a high-cost asset you own. This shift could lead to more affordable and accessible technology, and potentially a more sustainable technological landscape. Edge computing, where processing occurs closer to the data source (e.g., smart sensors, IoT devices) to reduce latency, will also play a crucial role, often complementing cloud infrastructure.
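As a rough illustration of how edge processing can complement the cloud, the sketch below reduces a batch of raw sensor readings to a small summary on the device, so only the summary needs to travel over the network. The function name `summarize_readings`, the `alert_threshold` parameter, and the sample values are hypothetical, chosen only for this example, and the sketch assumes the batch is non-empty.

```python
from statistics import mean


def summarize_readings(readings, alert_threshold):
    """Reduce raw sensor readings to a compact summary on the edge device.

    Hypothetical helper: assumes `readings` is a non-empty list of numbers.
    """
    highest = max(readings)
    return {
        "count": len(readings),              # how many raw samples were seen
        "mean": mean(readings),              # average over the batch
        "max": highest,                      # peak value in the batch
        "alert": highest > alert_threshold,  # flag computed locally, with no round trip
    }


# Illustrative values only: three temperature samples and a made-up threshold.
summary = summarize_readings([20.0, 22.0, 30.0], alert_threshold=25.0)
print(summary)
```

The device could then upload `summary` instead of every raw sample, which is one way the latency and bandwidth benefits described above might be realized in practice.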

A particularly interesting evolution is the future of specialized hardware like Graphics Processing Units (GPUs). Historically, GPUs have been essential for graphically intensive tasks like gaming, video editing, and AI/machine learning. However, with cloud gaming services (like Xbox Cloud Gaming, GeForce NOW) and cloud-based AI/ML platforms (like Google Cloud AI Platform, Amazon SageMaker), the need for a powerful local GPU diminishes for the end-user. The heavy lifting is offloaded to powerful GPU farms in the cloud. While GPUs will continue to exist and evolve within these cloud data centers, for many consumers, the days of needing to buy and upgrade an expensive, power-hungry GPU for their personal machine may be numbered. Instead, they’ll simply subscribe to a service that streams the graphical output or provides access to the necessary computational power on demand.

A Cloud of Protection: Software Piracy

One of the more impactful side effects of cloud computing is its potential to significantly reduce software piracy. When software is delivered as a service (SaaS) from the cloud, the traditional model of purchasing a license and installing a static copy changes fundamentally. Instead, you’re accessing the software remotely, and your access is actively managed and authenticated by the cloud service provider. For fully web-based applications, most of the application code runs on the provider’s servers rather than on your local machine, so there is no complete copy that can be easily duplicated or reverse-engineered for unauthorized distribution.

This makes it much harder to create and distribute unauthorized copies. Access to the software is controlled by user accounts, subscriptions, and server-side authentication checks. If you don’t have a valid, active subscription, you can’t log in and use the software. This model reduces reliance on the static product keys and offline licensing schemes that were often vulnerable to cracking. For example, popular Adobe software like Photoshop and Illustrator, which was once widely pirated through cracked license keys, is now sold mainly through Creative Cloud subscriptions. The applications are still installed locally, but they require users to sign in and have their license validated periodically by Adobe’s servers, which raises the bar for unauthorized use.
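The access check described above can be sketched in a few lines. This is a minimal illustration, not any vendor’s actual implementation: the `Subscription` record, the `has_access` and `handle_request` functions, and the in-memory dictionary standing in for a database are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Subscription:
    """Hypothetical server-side record of a user's subscription."""
    user_id: str
    expires_at: datetime
    cancelled: bool = False


def has_access(sub, now):
    """True only if a subscription exists, is not cancelled, and has not expired."""
    if sub is None:
        return False
    return not sub.cancelled and now < sub.expires_at


def handle_request(subscriptions, user_id, now):
    """Gate one request on the subscription check (dict stands in for a database)."""
    if has_access(subscriptions.get(user_id), now):
        return "200 OK"
    return "403 Forbidden"


# Illustrative data: one active subscriber and one whose subscription lapsed.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
subscriptions = {
    "alice": Subscription("alice", expires_at=now + timedelta(days=30)),
    "bob": Subscription("bob", expires_at=now - timedelta(days=1)),
}
for user in ("alice", "bob", "carol"):
    print(user, handle_request(subscriptions, user, now))
```

Because the check runs on the provider’s side for every request, there is no local key to crack: a lapsed or missing subscription simply results in a refusal.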

This built-in access control can protect intellectual property more effectively than traditional on-premises software. This shift from “owning” a copy of software to “subscribing” to a service offers a powerful mechanism for software developers and publishers to protect their intellectual property, ensure they are fairly compensated for their work, and ultimately foster continued innovation in the software industry by providing a more stable revenue stream.

The cloud isn’t just a technological trend; it’s a fundamental shift in how we build, deploy, and consume software and, increasingly, how we interact with hardware. It’s a journey that’s far from over, promising even more innovative, integrated, and pervasive digital experiences in the years to come, shaping everything from global business to our daily personal lives.